
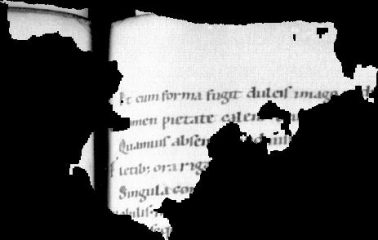

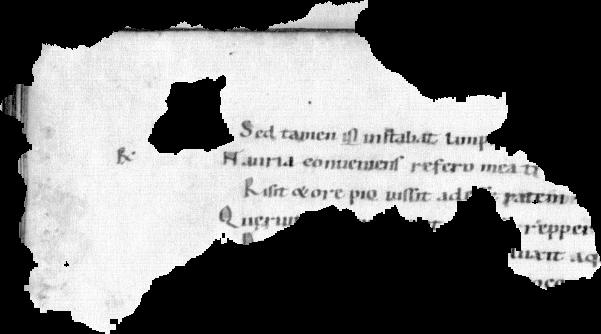

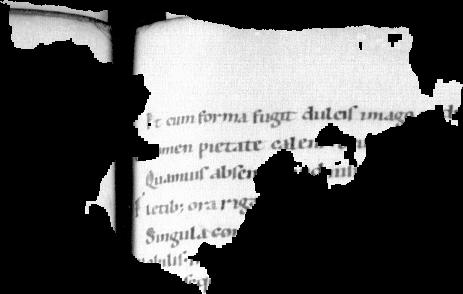

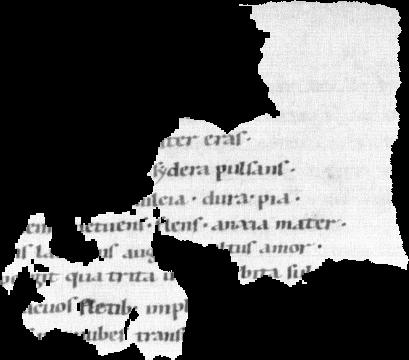

This competition investigates the performance of large-scale retrieval of historical document fragments based on writer recognition. The analysis of historical fragments is a difficult task that is usually carried out by trained humanists. To simulate fragments, we extract random text patches from historical document images. The goal is then to find similar patches from the same page or manuscript. The document images come from several institutions and cover different genres (manuscripts, letters, charters).

Task

Given a document fragment as query, the task consists of finding all fragments that belong to (a) the same page and (b) the same writer.

Feb 15 Homepage running, registration possible (just send V. Christlein an e-mail; you can also start anytime).

April 1 5 Providing official training set with 100k realistic fragments.

May 1 Providing evaluation test set (20k fragments) and evaluation method.

May 15 (new: May 22nd) Competition deadline.

The full dataset is now available below: https://doi.org/10.5281/zenodo.3893807

It contains a training and a test set with the following image naming convention: WID_PID_FID.jpg, where WID = writer ID, PID = page ID, and FID = fragment ID.

- Train set: contains about 100 000 fragments generated from the Historical-IR19 base dataset. All fragments should contain some text, although some of them are quite small.

- Test set: contains about 20 000 new fragments
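The naming convention above can be turned into labels with a few lines of Python. This is a minimal sketch: the function name `parse_fragment_name` and the `FragmentId` tuple are hypothetical helpers, not part of the dataset or the evaluation code, and the IDs are kept as strings because the page does not state whether they are purely numeric.

```python
from pathlib import Path
from typing import NamedTuple


class FragmentId(NamedTuple):
    """Labels encoded in a file name of the form WID_PID_FID.jpg."""
    writer: str
    page: str
    fragment: str


def parse_fragment_name(filename: str) -> FragmentId:
    """Split 'WID_PID_FID.jpg' into writer, page and fragment IDs."""
    stem = Path(filename).stem  # drops the directory and the extension
    parts = stem.split("_")
    if len(parts) != 3:
        raise ValueError(f"expected WID_PID_FID, got {filename!r}")
    return FragmentId(*parts)


# Made-up file name, for illustration only.
print(parse_fragment_name("12_345_6.jpg"))
```

Two fragments share a writer when their `writer` fields match. For page-level labels, comparing the pair `(writer, page)` avoids assuming that page IDs are unique across writers.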

If you use the dataset please cite [1].

The dataset was created by Mathias Seuret. The generation code is publicly available at: https://github.com/seuretm/diamond-square-fragmentation

- ICDAR’19 Competition on Image Retrieval for Historical Handwritten Documents, page, data, paper

- ICDAR’17 ICDAR2017 Competition on Historical Document Writer Identification, page, data, paper

- More related competitions: ICDAR’17 CLAMM, ICDAR’16 CLAMM

The evaluation will be done using a leave-one-image-out cross-validation approach. This means that every image of the test set will be used as a query, for which the other test images have to be ranked. The competition will be evaluated in two ways using mean average precision (mAP):

1. On the writer level, i.e. the goal is to find fragments of the same writer.

2. On the page level, i.e. the goal is to find fragments of the same page.
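To make the protocol concrete, here is a minimal pure-Python sketch of leave-one-out mAP computed from a square distance matrix. It is not the official evaluation code (that is the wi19_evaluate repository linked on this page); the function names are hypothetical, and skipping queries that have no other relevant fragment is an assumption of this sketch.

```python
def average_precision(ranked_relevance):
    """AP of one ranked list of booleans; None if nothing is relevant."""
    hits = 0
    precisions = []
    for rank, is_relevant in enumerate(ranked_relevance, start=1):
        if is_relevant:
            hits += 1
            precisions.append(hits / rank)  # precision at this hit
    if not precisions:
        return None
    return sum(precisions) / len(precisions)


def mean_average_precision(dist, labels):
    """Leave-one-out mAP: each item queries all other items.

    dist[q][j] is the distance between query q and item j
    (smaller means more similar); labels[j] is the writer or page label.
    """
    n = len(labels)
    aps = []
    for q in range(n):
        gallery = [j for j in range(n) if j != q]   # leave the query out
        gallery.sort(key=lambda j: dist[q][j])      # most similar first
        ap = average_precision([labels[j] == labels[q] for j in gallery])
        if ap is not None:  # assumption: skip queries without relevant items
            aps.append(ap)
    if not aps:
        raise ValueError("no query has a relevant gallery item")
    return sum(aps) / len(aps)


# Toy example: two fragments of writer "a", one of writer "b".
toy_dist = [[0, 2, 1],
            [2, 0, 1],
            [1, 1, 0]]
print(mean_average_precision(toy_dist, ["a", "a", "b"]))
```

Passing writer IDs as `labels` gives the writer-level score; passing page labels gives the page-level score.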

A baseline system can be found at: https://github.com/anguelos/wi19_evaluate/tree/master/srslbp . The code at https://github.com/anguelos/wi19_evaluate can also be used to evaluate your system.

- New: If you want us to evaluate your code please follow the original submission process:

- Please upload your 20 000 x 20 000 CSV file and send us the link (tip: don’t save full float values but only a few digits after the decimal point, otherwise the file will be very large; we also accept other file formats if necessary, provided you send us the code for reading them). The CSV file is an ordinary CSV file whose first row and first column denote the query and gallery files. The remaining entries contain the per-patch distances (the smaller, the more similar).

- NEW: Submission example: https://faubox.rrze.uni-erlangen.de/dl/fiGKF6PMCdH1S7Da2ULgbaAF/example_fragement_retrieval.py

- Please also send us a scientific description (half a page to one page) of the method used.
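As an illustration of the file format described above, the following sketch writes a small distance matrix with truncated precision using Python's standard `csv` module. It is not the official submission example linked above. The function name `write_distance_csv`, the empty top-left header cell, the choice of rows as queries and columns as gallery files, and the default of four decimal digits are all assumptions of this sketch.

```python
import csv
import io


def write_distance_csv(out, names, dist, digits=4):
    """Write a square distance matrix with file names as header row/column.

    out    : text file object opened with newline=""
    names  : fragment file names, same order for rows and columns
    dist   : dist[i][j] = distance between names[i] and names[j]
    digits : decimals kept per value, to keep the file small
    """
    writer = csv.writer(out)
    writer.writerow([""] + list(names))  # assumption: empty corner cell
    for name, row in zip(names, dist):
        writer.writerow([name] + [f"{d:.{digits}f}" for d in row])


# Toy example with made-up file names, written to an in-memory buffer.
buffer = io.StringIO(newline="")
write_distance_csv(buffer,
                   ["1_1_1.jpg", "1_1_2.jpg"],
                   [[0.0, 0.123456], [0.123456, 0.0]])
print(buffer.getvalue())
```

For the real submission, the buffer would be replaced by a file opened with `open(path, "w", newline="")`.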

For more information, please see (also available at arXiv after ICFHR):

[1] M. Seuret, A. Nicolaou, D. Stutzmann, A. Maier, V. Christlein, “ICFHR 2020 Competition on Image Retrieval for Historical Handwritten Fragments”, International Conference on Frontiers in Handwriting Recognition, September 2020, pp. 216-221, doi: 10.1109/ICFHR2020.2020.00048

Organizers

Acknowledgement

This work is partially supported by the European Union cross-border cooperation program between the Free State of Bavaria and the Czech Republic, project no. 211.