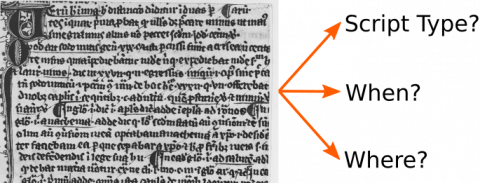

This competition investigates the performance of historical document classification. The analysis of historical documents is a difficult challenge commonly solved by trained humanists. We provide three different classification tasks, which can be solved individually or jointly: font group, location, date. Additionally, we will declare an overall winner for participants who contributed to all three tasks. The document images are provided by several institutions and different genres (handwritten and printed manuscripts, letters, charters).

Paper

https://link.springer.com/chapter/10.1007/978-3-030-86337-1_41

Tasks

- Font/Script type classification

- Date range classification

- Location classification

Timeline

- Sep 10 Homepage running, registration already possible for obtaining updates, link to the CLaMM’16 and CLaMM’17 competition datasets, as well as the font group recognition dataset for the first task (font/script type classification).

- Oct 10 Providing additional training sets for task 2

- Nov 1 Task 3 which represent the final classes. For each training set, we will provide pytorch dataset loaders for quick and easy processing. First data loaders are available here: https://github.com/anguelos/histdiads

- Nov 1 Submission of systems possible, i. e. the test set is available internally but at this point participants can hand in only full systems that will then be evaluated on our machines. A public leaderboard will be updated each month (Note: so far no system submissions happened, so no public leader board). A submission can be handed-in once a week and its result seen only for the participant.

- Mar 29 Test set will be made publicly available. Submissions of single csv files possible. Per task 5 submissions possible.

April 16April 25 Competition deadline.

Registration

https://forms.gle/3mobXxXKCjMEn3Xp6

Data

- Task 1 font group/script type classification:

- Train:

- Script type datasets: ICDAR’17 CLAMM, ICDAR’16 CLAMM (you find the datasets at the bottom of ‘registration & download’. Also note that some parts of the CLAMM’17 training data uses data from the CLAMM’16 data)

- Font group dataset: https://doi.org/10.5281/zenodo.3366685 (note: The test set will contain only one font group but this dataset also provides multiple labels. It’s always main_font[,other_font,other_font,…] . If there is no main font group it is denoted with ‘-‘, i.e. the first column will have a ‘-‘.)

- New Test:

- Task 1a, font groups: https://zenodo.org/record/4836551

- Task 1b, scripts: https://zenodo.org/record/4836659

- Train:

- Task 2 date classification

- New train + test dataset: https://zenodo.org/record/4836687

- Dataset contains dates/date ranges, goal is to get as close as possible to the date ranges, i.e. mean absolute error of estimated date to the date range

- gt.csv: notBefore,notAfter,filename

- File names: imageNumber_notBefore_notAfter.jpg

- additional allowed train dataset: ICDAR’17 CLAMM

- New train + test dataset: https://zenodo.org/record/4836687

- Task 3 location classification

- New train : https://zenodo.org/record/4836707

test: https://zenodo.org/record/7849576

- New train : https://zenodo.org/record/4836707

Note: All test pages of Task 1 (fonts) contain a known font (i.e., no “not a font”, and no “other font” labels are present), and that there is a single font on each test page.

These are the lists of classes:

Task 1a, scripts:

Caroline, Cursiva, Half-Uncial, Humanistic, Humanistic Cursive, Hybrida, Praegothica, Semihybrida, Semitextualis, Southern Textualis, Textualis, Uncial

Task 1b, fonts:

Antiqua, Bastarda, Fraktur, Gotico Antiqua, Greek, Hebrew, Italic, Rotunda, Schwabacher, Textura, Not a font (not present in test set), Other font (not present in test set), “-“, i.e., not main font (not present in test set)

Task 2, dating:

There are no classes, only date ranges given. You are free to create your own date (range) classes. However note that we will use MAE between ground truth date range and your suggested date for the evaluation.

Task 3, location:

Cluny, Corbie, Citeaux, Florence, Fonteney, Himanis, Milan, MontSaintMichel, Paris, SaintBertin, SaintGermainDesPres, SainMatrialDeLinoges, Signy

Evaluation

The competition winners will be determined by the TOP-1 accuracy measurement (in case of dating: 1-MAE). The overall strongest method will be defined as the sum of ranks in all competitions. While the participants will have to deal with an imbalanced training set, we will try to balance the classes of the test set.

Submission

Each participant can participate in any subtask but we encourage to evaluate on all tasks since they follow nearly all the same classification protocol (exception is somewhat the dating task, however you are free to phrase it as a classification task, too). That means that only the classifier/classification layer needs to be adjusted to the different number of classes. Participants who submit a system can optionally participate in the competition anonymously.

Please send us (Mathias and Vincent) your results in form of CSV files in the following format: filename,class (or in case of dating: filename,date). You can submit up to 5 different CSVs per task.

Additionally, please send us 1/2 page description of your method (also containing team member names) and the info if you are fine that we also publicly show the results on this competition page (can also be published anonymous with a team name).

Submission of systems: possible 1st of November 2020

Leaderboard

| Team name | Overall Accuracy [%] | Average Accuracy (Unweighted Average Recall) [%] |

| PERO (4) | 88.77 | 88.84 |

| PERO (5) | 88.46 | 88.60 |

| PERO (2) | 88.46 | 88.54 |

| PERO (1) | 83.04 | 83.11 |

| PERO (3) | 80.33 | 80.26 |

| The North LTU | 73.96 | 74.12 |

| Baseline | 55.81 | 55.22 |

| CLUZH (3) | 36.86 | 35.25 |

| CLUZH (1) | 26.11 | 25.39 |

| CLUZH (2) | 22.29 | 21.51 |

| Team name | Overall Accuracy [%] | Average Accuracy (UAR) [%] |

| PERO (5) | 99.04 | 98.48 |

| PERO (2) | 99.09 | 98.42 |

| PERO (4) | 98.98 | 98.27 |

| NAVER Papago (3) | 98.32 | 97.17 |

| NAVER Papago (2) | 97.86 | 96.47 |

| PERO (1) | 97.93 | 96.36 |

| NAVER Papago (4) | 97.69 | 96.24 |

| NAVER Papago (5) | 97.64 | 96.01 |

| NAVER Papago (1) | 97.64 | 95.86 |

| PERO (3) | 97.44 | 95.68 |

| CLUZH (3) | 97.13 | 95.66 |

| CLUZH (1) | 96.84 | 95.34 |

| CLUZH (2) | 96.80 | 95.16 |

| The North LTU | 87.00 | 82.80 |

| Team name | Mean Absolute Error [y] | In Interval [%] |

| PERO (2) | 21.91 | 48.25 |

| PERO (4) | 21.99 | 47.97 |

| PERO (3) | 32.45 | 39.75 |

| PERO (1) | 32.82 | 40.58 |

| The North LTU | 79.43 | 28.97 |

| Team name | Overall Accuracy | Average Accuracy (UAR) | |

| PERO (5) | 79.69 | 79.69 | |

| PERO (3) | 75.08 | 75.08 | |

| PERO (1) | 74.77 | 74.77 | |

| PERO (4) | 70.77 | 70.77 | |

| PERO (2) | 69.85 | 69.85 | |

| Baseline | 63.12 | 62.46 | |

| The North LTU | 43.69 | 43.69 |

Organizers

- Mathias Seuret

- Anguelos Nicolaou

- Dominique Stutzman

- Nikolaus Weichselbaumer

- Martin Mayr

- Andreas Maier

- Vincent Christlein

Acknowledgement

This work is partially supported by the European Union cross-border cooperation program between the Free State of Bavaria and the Czech Republic, project no. 211.