Endoscopy – Part 2

These are the lecture notes for FAU’s YouTube Lecture “Medical Engineering”. This is a full transcript of the lecture video & matching slides. We hope you enjoy this as much as the videos. Of course, this transcript was created with deep learning techniques largely automatically and only minor manual modifications were performed. Try it yourself! If you spot mistakes, please let us know!

Welcome back to Medical Engineering. Today we want to spend a little more time in endoscopy and in particular we want to look at future developments and the latest state-of-the-art in the field and how this may impact the surgeries of the future.

This is endoscopy part two and what do I have for you? Well, the first thing that I want to show is a kind of set of tools that are assembled into a technique that is called natural orifice transluminal endoscopic surgery.

So, this is a kind of intervention program where you try to cause as few scars as possible. So, you want to use essentially natural orifices like the mouth, anus, and so on and enter the body through these keyholes. Then you want to go ahead and do the incisions essentially in the stomach or in the colon to access the site that you actually want to perform your treatment on. So, this is still in an early experimental stage, but it is already allowed to be performed in humans. In practice, it is basically performed using hybrid techniques where you then use additional technology in order to help with the navigation.

So, you can see NOTES has a couple of advantages. You need less anesthesia because there’s less pain in the stomach. Then of course you have an even shorter recovery time. So that’s another big advantage of this kind of technology, and you don’t have any external scars. On the other hand, there are also a couple of disadvantages. In particular, the access points are unsterile when you do the incisions in the colon, and so on. So, this causes a bit of trouble. Another one is that you must have very flexible endoscopes because you have no stable abutment, and also the navigation can become very difficult. So, there are lots of challenges with NOTES and therefore you need additional navigation. We will see that, for example, with 3D endoscopes later in this video.

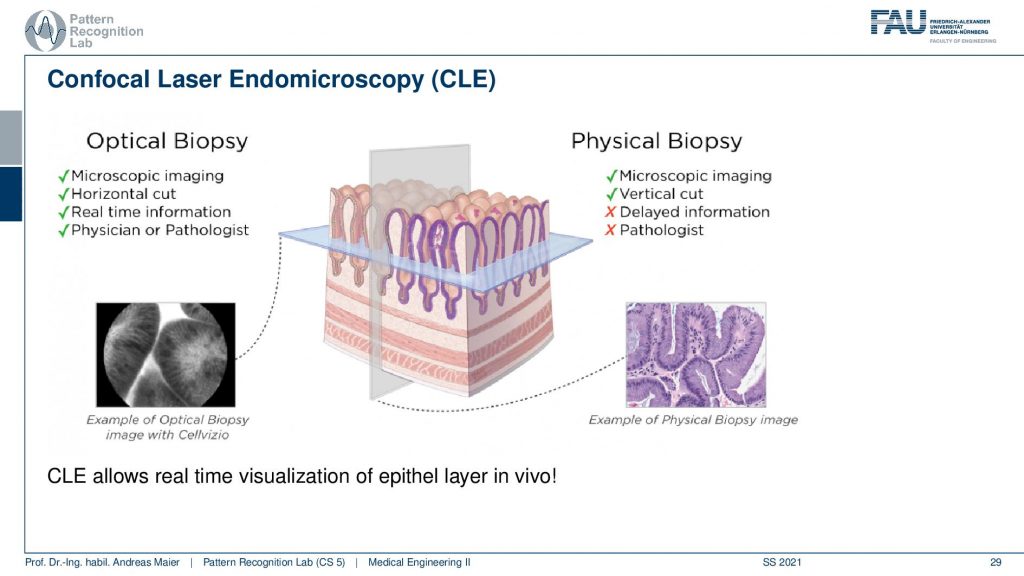

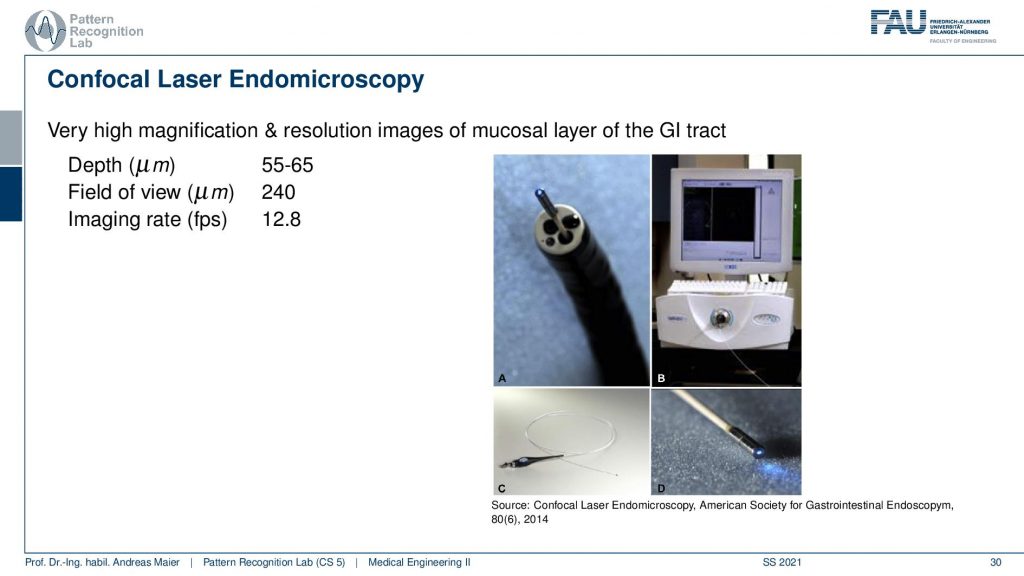

Another very interesting technique that I want to show to you is the so-called Confocal Laser Endomicroscopy, the CLE device. This is essentially able to perform what is called optical biopsy inside the patient. So typically, if you perform a biopsy, what you do is you go inside the body and you take out a small piece of tissue. This tissue then has to be taken out of the body. It must be stained and it has to be prepared in a very elaborate process. Then you get an image like this one and here you can then identify different cells, you can identify cancer, and you can really go into the cellular range and identify any processes that go wrong on this level. Obviously, this takes a little longer because you have to take out the tissue, you have to stain it, you have to cut it, you have to run the microscope, and so on. So, this is kind of slow, but of course it allows you to get insights that you can’t get in vivo. Now the CLE is a kind of idea to do that inside of the body. So, this then takes views that are essentially also on the microscopic level. So, you will be able to differentiate individual cells. This is a very exciting technology.

So typically, you have a field of view of about a quarter millimeter and then you can also image up to a depth of approximately 50 micrometers. So, the imaging rate is approximately 12.8 frames per second. You can see this is why I already hinted at the device channel here in our flexible endoscopes. So, you can see this is the tip of the CLE device and here you can see the light coming out. So, you can see this small light here is actually where the imaging is done and this is the flexible endoscope where you’re putting your CLE probe through. So how does this work?

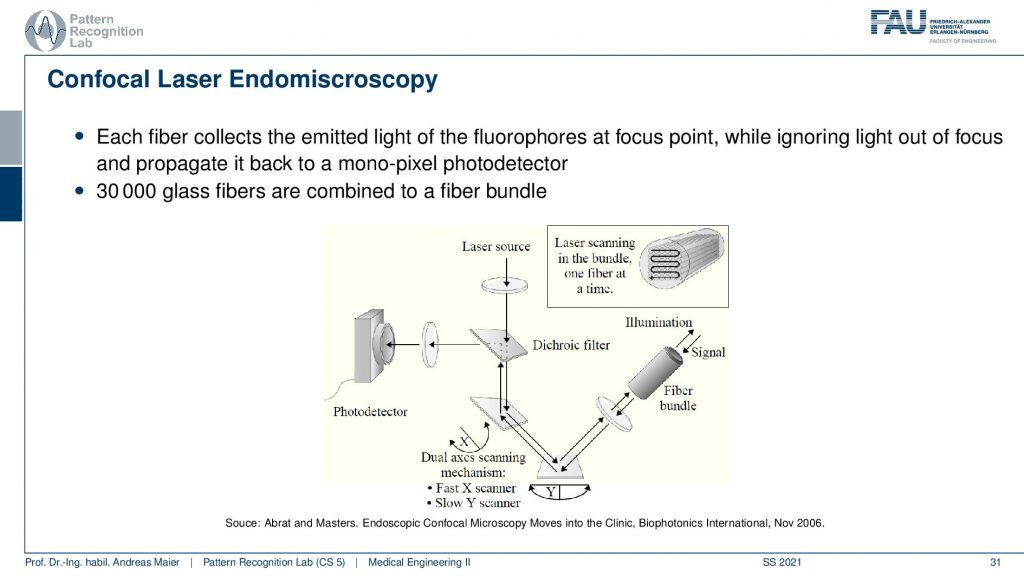

Well, it works the following way: you have a laser source. The laser light is sent via two mirrors and then through a fiber bundle in order to reach the scene. Then, of course, the light is reflected and returns over the two mirrors. Then you have the dichroic mirror, where it is reflected here to a photodetector. So this way we can start scanning, and with these two mirrors here we can change the x- and y-direction such that we can rasterize the fiber bundle and sample all of the pixels in the image. This then allows us to produce images that will be able to show individual cells.
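The scanning idea can be illustrated with a small sketch. It is only a toy model under stated assumptions: `raster_scan` and `toy_tissue` are hypothetical names, the grid size is arbitrary, and the "tissue" is a made-up signal with bright gaps between cells. It only shows how two scan directions and one detector reading per position build up an image.

```python
def raster_scan(sample, width, height):
    """Build an image by stepping two scan mirrors over a regular grid."""
    image = []
    for iy in range(height):            # slow mirror: selects the row (y)
        row = []
        for ix in range(width):         # fast mirror: sweeps along the row (x)
            row.append(sample(ix, iy))  # one photodetector reading per position
        image.append(row)
    return image


def toy_tissue(ix, iy):
    """Hypothetical fluorescence signal: bright cell gaps on a 4-pixel grid."""
    return 1.0 if ix % 4 == 0 or iy % 4 == 0 else 0.1


image = raster_scan(toy_tissue, width=8, height=8)
for row in image:
    # Bright readings are drawn as '#', dark cell interiors as '.'
    print("".join("#" if value > 0.5 else "." for value in row))
```

In a real device the sample function is replaced by the detector signal, and the grid is defined by the mirror positions rather than by loop indices.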

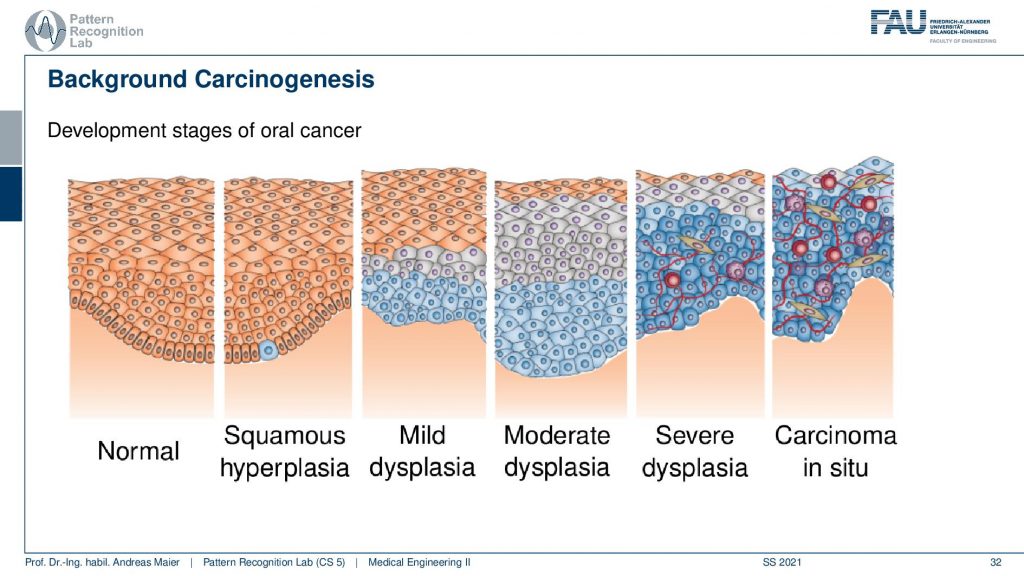

So to understand a bit what we’re seeing on these images, let’s first think about what we’re going to expect in the mucosa, that is the epithelium. These are exactly the cells that we are interested in and you typically find them in your mouth or you would also find them inside of your colon and the gastrointestinal tract. So what is happening when cancer develops? The tissue changes from a normal state, as you can see here, to a small kind of swelling and then you get mild dysplasia, moderate dysplasia, up to severe dysplasia. Here you can see that the structure of the cells breaks down and when you develop a carcinoma in situ the cell formation is very different and you get these very irregular structures. Because the cell shapes change and the tissue is breaking down, you have this swelling and the tumor growing.

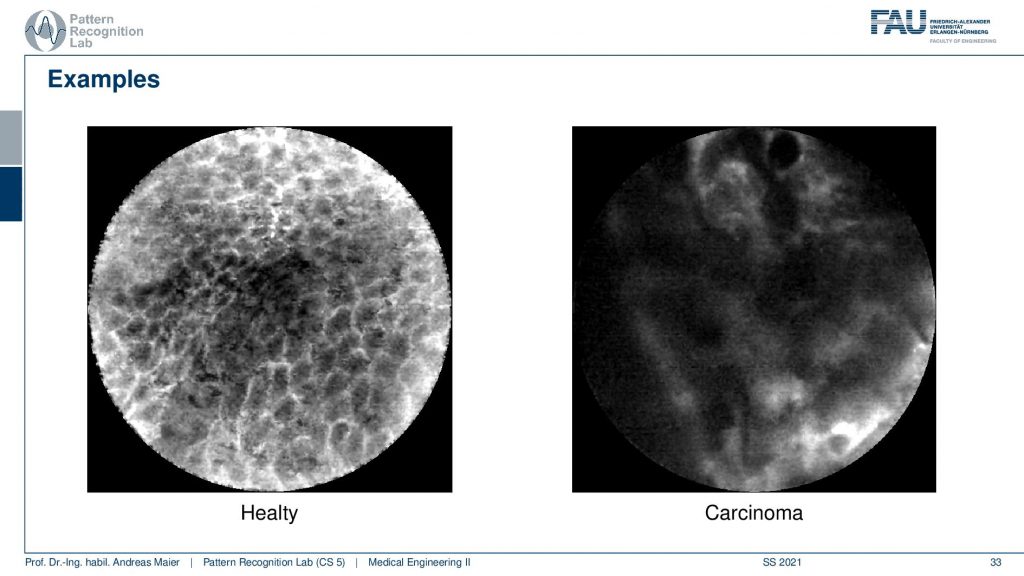

Now, this can also be visualized in the CLE images and you can see this on the two images here. So on the left-hand side, you see a regular epithelial kind of cell tissue and you see that you can still outline individual cells here. So you see the gaps between the cells and this is a regular mucosal epithelium. Now if you have carcinoma you see that there is essentially no cell delineation. You just see those black blobs and then you see stuff like this one here, so it’s very hard to see anything on such an image. This happens if you have a carcinoma. Now what is interesting about this is that we can image this inside of the patient. So for example, if we suspect that a nodule is cancerous we can use the CLE probe in order to investigate whether this is just regular tissue and will just disappear on its own or whether it’s really a cancer and has to be taken out. Also, it’s very useful if you want to figure out how much tissue you have to remove in order to have really taken out everything that is already cancerous and needs to be removed. One thing that you still should keep in mind when you are imaging with CLE: the reason why we actually see the gaps between the cells is that we are using a fluorescent dye. This is administered to the patient and of course, this fluorescent dye has to go into the patient first before you can see these very neat cellular structures here. So there’s also a little downside: when you want to use the CLE endoscope you have to use this contrast agent or dye. This is why imaging is also not so easy. But this kind of technology is already emerging and a lot of potential applications have been identified that are currently being investigated in research projects.

Another very important topic is 3D endoscopy.

3D endoscopes could be a game-changer for very complex interventions like the NOTES one that we’ve seen earlier. Here the idea is that we want not just to see the inside but we want to get really a 3D reconstruction of the tissues inside of the body.

So in 3D endoscopy, we actually have a technique where we acquire metric range data. So depth data like surfaces or point clouds of the surgical site within an endoscopic intervention. This range data then delivers topographic information about the observed scene. It can be acquired using different techniques and we will look into a couple of those in the following and discuss their advantages and disadvantages.
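To make "metric range data" a bit more concrete, here is a minimal sketch, assuming a calibrated pinhole camera model. The intrinsic parameters (`FX`, `FY`, `CX`, `CY`) and the pixel and depth values are hypothetical toy numbers, not values from any real endoscope. The sketch turns two pixels with known depth into 3D points and measures their distance in millimeters.

```python
import math

# Hypothetical intrinsics of a calibrated camera (focal lengths and
# principal point in pixels); real values come from a calibration step.
FX, FY = 500.0, 500.0
CX, CY = 320.0, 240.0


def back_project(u, v, depth_mm):
    """Map pixel (u, v) with a measured depth to a 3D point in millimeters."""
    x = (u - CX) * depth_mm / FX
    y = (v - CY) * depth_mm / FY
    return (x, y, depth_mm)


def distance_mm(p, q):
    """Euclidean distance between two 3D points in millimeters."""
    return math.dist(p, q)


# Two pixels on the same image row, both measured at 50 mm depth.
point_a = back_project(320, 240, 50.0)
point_b = back_project(420, 240, 50.0)
print(point_a, point_b, distance_mm(point_a, point_b))
```

Applying `back_project` to every pixel of a range image yields a point cloud, from which a mesh can then be built.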

So generally an advantage of the 3D endoscope is that it allows metric measurements because it’s calibrated. We can then also measure distances in millimeters and so on. On top, you get a mesh representation of the field of view and this then allows intuitive visualization. So we can change the viewpoint artificially. So if you look from one side then you can turn the scene and move to a location that is physically actually not accessible. So that’s also a huge advantage of using techniques like this, and because the data is metric, you can also avoid collisions. This is also very important for computer guidance and in particular for keyhole surgeries. Let’s say you’re operating on the brain and you don’t want to damage certain areas, then you can couple this with computer guidance which then will avoid collisions with any tissue at risk. There are of course disadvantages. It’s kind of early-stage. There are already commercially available endoscopes around, but they are not used in all interventions. Also, you may suffer from specular highlights and sometimes it’s really unpopular with surgeons. They’re used to the tools that they have. They have the kind of idea in their head how they have to perform the surgery. If you don’t develop the tools in a way that they are so intuitive that they really facilitate the intervention, then these devices are also not so popular with their users. If you build something that is not really useful for your user, then even if you put in all the latest technology and the fanciest stuff, nobody will use it. So this is also key when you finish your studies and you go on developing medical products. You always have to talk to your medical users, to your surgeons, to your radiologists, and so on. If you develop something that is not of immediate use for them, they will simply not use it. So you have to build things in cooperation with the medical users such that they are accessible, usable, and have user interfaces that will then also facilitate the actual treatment.

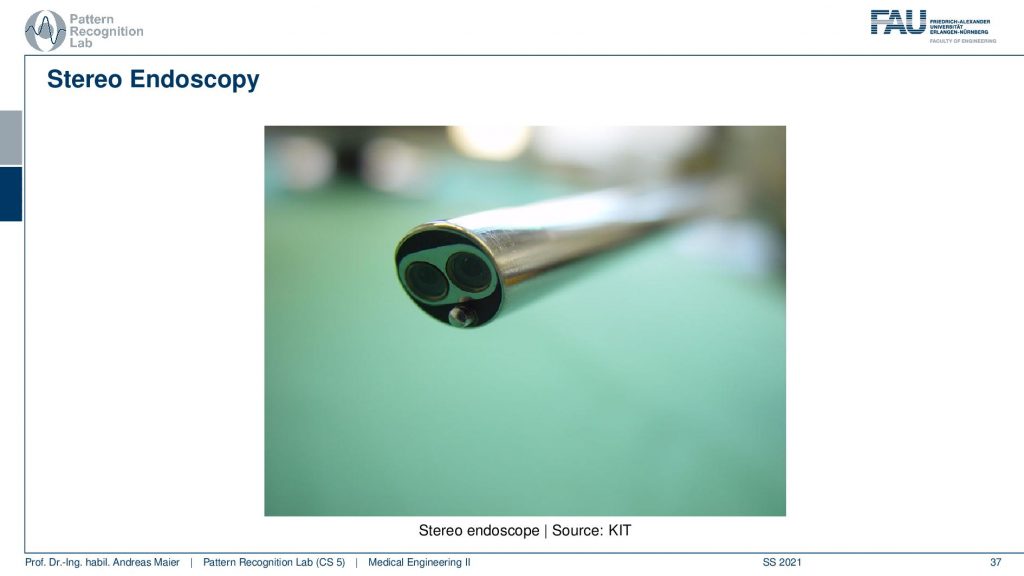

Now let’s have a look at one type of 3D endoscope and this is the stereo endoscope. You can see here this is a rigid endoscope and you see that now we have two optics inside of the rigid endoscope which allow us to build a stereo basis. With this, we can then reconstruct surfaces from the two images. So this is very similar to what you’re doing as a human with two eyes you are able to perceive depth and can orient yourself in the 3D space.

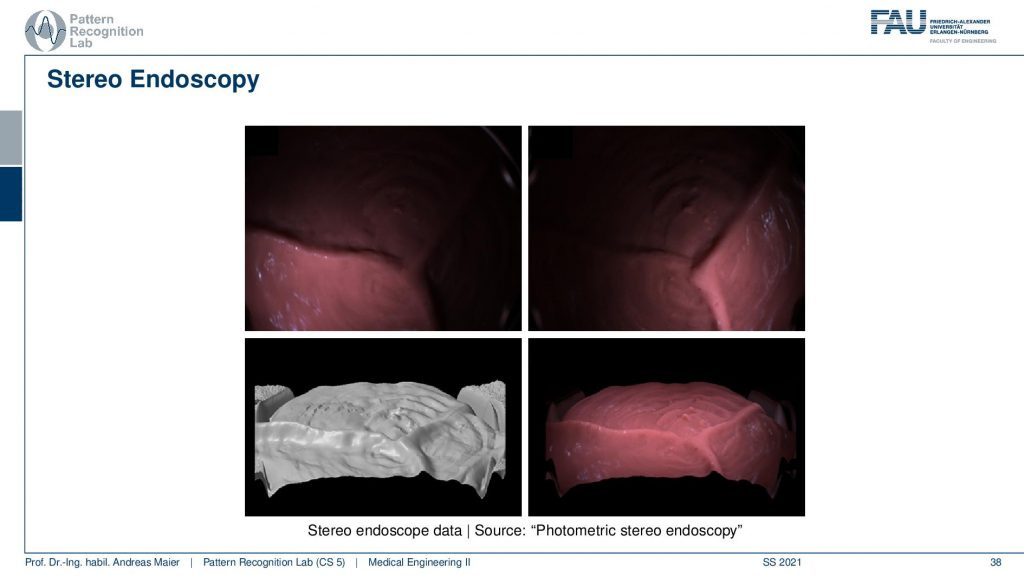

So these are some results of what the actual reconstruction looks like. So here you see the reconstruction of the surface in grayscale on the bottom left and then different renderings and artificial views on the other three images of the same surface. One thing that you have to keep in mind if you want to use technologies like this one: you will need point correspondences in order to be able to reconstruct the surface data. So you essentially need to find small points and their corresponding points in the other image in order to estimate depth. So this is a key problem of stereo vision.
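A minimal sketch of this correspondence search, assuming rectified images so that matching points lie on the same image row. It uses simple block matching with a sum of absolute differences, which is one common textbook choice and not necessarily what a commercial stereo endoscope does. All numbers (the toy scanline, `focal_px`, `baseline_mm`) are hypothetical.

```python
def sad(a, b):
    """Sum of absolute differences between two equally long patches."""
    return sum(abs(x - y) for x, y in zip(a, b))


def match_disparity(left, right, x, half, max_disp):
    """Disparity d that best matches the left patch at x to the right patch at x - d."""
    patch = left[x - half: x + half + 1]
    best_d, best_cost = 0, float("inf")
    for d in range(max_disp + 1):
        xr = x - d
        if xr - half < 0:               # candidate patch would leave the image
            break
        cost = sad(patch, right[xr - half: xr + half + 1])
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d


def depth_from_disparity(d_px, focal_px, baseline_mm):
    """Triangulation for a rectified stereo pair: Z = f * B / d."""
    if d_px <= 0:
        raise ValueError("disparity must be positive to triangulate")
    return focal_px * baseline_mm / d_px


# Toy scanline with texture; the right view sees it shifted by 3 pixels.
left_row = [3, 7, 1, 9, 4, 8, 2, 6, 5, 0, 9, 3, 7, 1, 8, 2]
right_row = left_row[3:] + [0, 0, 0]

disparity = match_disparity(left_row, right_row, x=8, half=2, max_disp=6)
depth = depth_from_disparity(disparity, focal_px=60.0, baseline_mm=4.0)
print(disparity, depth)
```

Because the two optics sit only a few millimeters apart in the endoscope tip, disparities are small, so the depth estimate is sensitive to matching errors.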

This brings us now to a short summary here. The advantage is that high-definition endoscopes are already available. The technology is certified and commercially available and it has a high range data accuracy. So the depth is very accurately computed and you also get color information, RGB, and range information at the same time. Yet the range quality and the range image resolution depend on the texture. So if you’re imaging something that doesn’t have any texture, like a white wall or a uniform organ, you will not get any depth information. So this is a huge problem and also the feature matching is computationally expensive. So these are drawbacks of stereo endoscopes. Well, what could we do about the dependence on the texture?
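The texture dependence can be demonstrated with a small sketch, assuming the same simple block matching on a single image row as a stand-in for real feature matching. The scanlines and the helper names `matching_costs` and `is_ambiguous` are hypothetical. On a textured row exactly one shift gives the lowest cost, while on a uniform row every shift fits equally well, so no depth can be derived.

```python
def matching_costs(left, right, x, half, max_disp):
    """Cost of matching the left patch at x against right patches at x - d."""
    patch = left[x - half: x + half + 1]
    costs = []
    for d in range(max_disp + 1):
        xr = x - d
        candidate = right[xr - half: xr + half + 1]
        costs.append(sum(abs(a - b) for a, b in zip(patch, candidate)))
    return costs


def is_ambiguous(costs):
    """True if more than one disparity reaches the minimal cost."""
    return costs.count(min(costs)) > 1


# Textured surface: the right view is the left view shifted by 3 pixels.
textured_left = [3, 7, 1, 9, 4, 8, 2, 6, 5, 0, 9, 3, 7, 1, 8, 2]
textured_right = textured_left[3:] + [0, 0, 0]

# Uniform surface (for example a homogeneous organ): no texture at all.
uniform_left = [5] * 16
uniform_right = [5] * 16

textured_costs = matching_costs(textured_left, textured_right, x=10, half=2, max_disp=5)
uniform_costs = matching_costs(uniform_left, uniform_right, x=10, half=2, max_disp=5)

print(textured_costs, is_ambiguous(textured_costs))
print(uniform_costs, is_ambiguous(uniform_costs))
```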

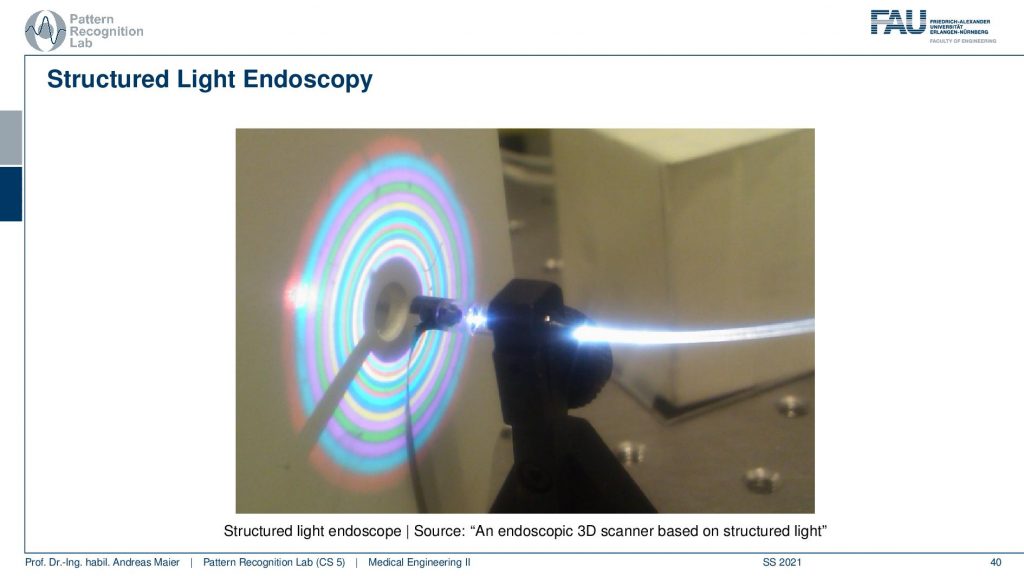

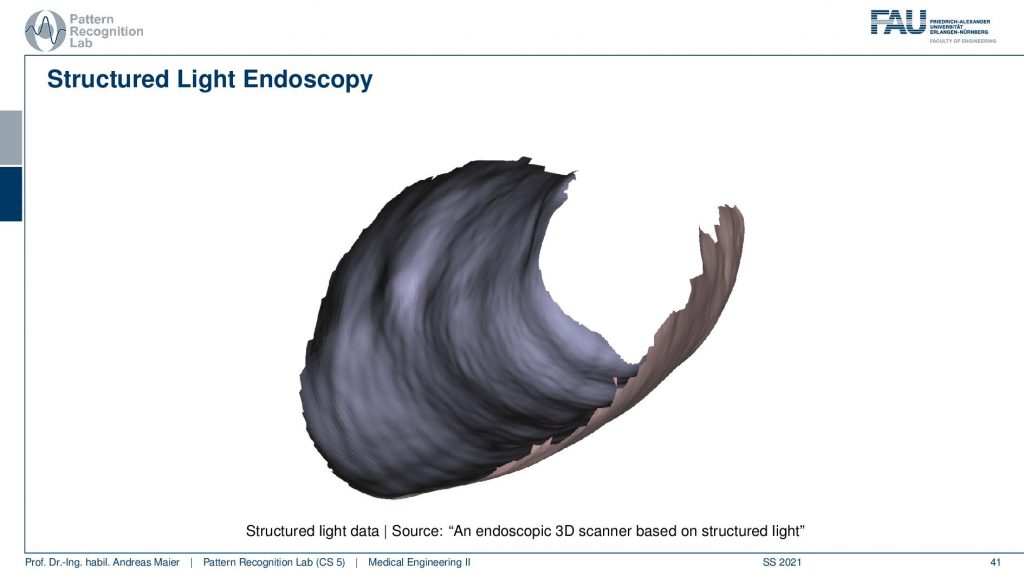

Well if there’s no texture in the scene we could just bring the texture in there and we can do that for example with structured light. So here you apply an additional light source that is able to emit controlled light like the rings that you see here in this colorful pattern. Because we know the pattern and we can change it we can essentially introduce a kind of texture into the scene that will then allow us to find point correspondences. With this, we can then very accurately reconstruct surface data.
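How a known pattern produces correspondences can be sketched as follows. Note the swap: instead of the colored ring pattern from the slide, this toy example uses binary Gray-code stripes projected one after another, which is a different and simpler coding scheme. Every projector column gets a unique bit sequence, so a camera pixel that observes this sequence knows which projector column illuminated it. All names and sizes are hypothetical.

```python
def gray_encode(n):
    """Binary-reflected Gray code of a non-negative integer."""
    return n ^ (n >> 1)


def gray_decode(g):
    """Inverse of gray_encode."""
    n = 0
    while g:
        n ^= g
        g >>= 1
    return n


def stripe_patterns(num_columns, num_bits):
    """patterns[b][c] is 1 if projector column c is lit in pattern number b."""
    return [
        [(gray_encode(c) >> (num_bits - 1 - b)) & 1 for c in range(num_columns)]
        for b in range(num_bits)
    ]


def decode_column(observed_bits):
    """Recover the projector column from the on/off sequence seen at one pixel."""
    g = 0
    for bit in observed_bits:
        g = (g << 1) | bit
    return gray_decode(g)


patterns = stripe_patterns(num_columns=8, num_bits=3)

# A camera pixel that was lit by projector column 5 sees these three bits.
observed = [pattern[5] for pattern in patterns]
print(observed, decode_column(observed))
```

Once the projector column is known for a camera pixel, depth follows from triangulation between projector and camera, just as between the two views of a stereo pair.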

You see an example here from a structured light sensor. Now a problem with this technique is because we are using the color information to encode the texture in there we kind of don’t get color information but only depth information with this kind of approach.

Well, this is a prototype and there are other ways around it. But that’s a clear disadvantage. Yet we get very accurate range data and we are close to independent of the actual texture inside of the patient. So this is a very useful tool, but then again there are also problems with low range image resolution. So this is also one of the reasons why this is essentially a subject of research. There are people working on this, but this is not available as a clinical product.

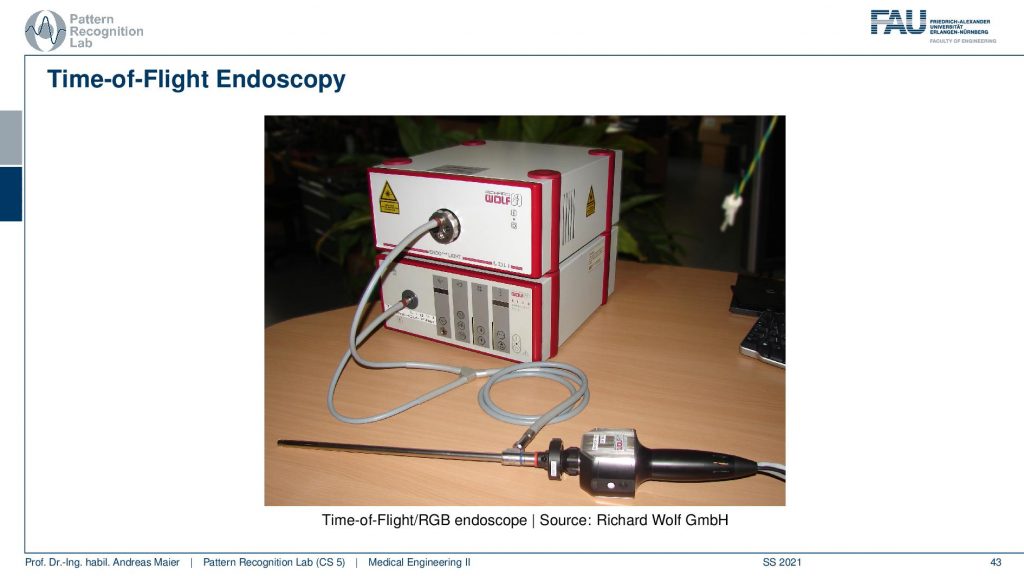

A third alternative that I want to show you here is the so-called time-of-flight endoscopy. Here a different sensor is being used, the so-called time-of-flight sensor. This is actually a type of sensor that comes from automotive developments. So these types of sensors have been developed for helping cars to park autonomously. So they can be used for example to measure a parking space and then have your car automatically fill the gap in between two other cars. Now with this kind of sensor, you can acquire 3D information at every pixel of the sensor. It’s essentially done by sending a modulated wave into the scene. This modulated wave is then reflected by the scene and collected by the camera, and because you measure the shift in phase you can compute how far away the point of reflection was, which gives you immediate depth information. So this is why it’s called time of flight: you’re actually measuring how long the light takes to the object and back in order to get the depth information. You may know these sensors from the popular Kinect 2 game controller for the Xbox. These devices have actually been used by many research groups. So they bought the Xbox Kinect 2 sensor because then they had a very inexpensive sensor that they could experiment on, maybe not for endoscopy but of course for many, many other applications. So today you can buy sensors like this one. They are mass-produced for general-purpose cameras and they cost around 200 euros. In this particular setup, we have this special light source attached to our endoscope. So we’re using the optics of the endoscope to bring infrared light into the body. It has this special pulse format that allows us to compute the time of flight and with that, we get dense 3D information independent of texture. So this is very cool technology and I’m quite proud because this is actually a development where our group has contributed quite a bit.
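The relation between phase shift and depth can be written down in a few lines. This is a minimal sketch, assuming a continuous-wave sensor that takes four samples per pixel at phase offsets of 0, 90, 180, and 270 degrees. The sample model, the sign convention, and the 20 MHz modulation frequency are assumptions for illustration, not the specification of the sensor used in the lecture. Since the light travels to the object and back, the distance is d = c * phase / (4 * pi * f).

```python
import math

C_M_PER_S = 299_792_458.0  # speed of light in vacuum


def four_phase_samples(distance_m, f_mod_hz, amplitude=0.5, offset=1.0):
    """Simulate four samples of an assumed model: offset + amplitude * cos(phase + k*pi/2)."""
    phase = 4.0 * math.pi * f_mod_hz * distance_m / C_M_PER_S
    return [offset + amplitude * math.cos(phase + k * math.pi / 2.0) for k in range(4)]


def phase_from_samples(a0, a1, a2, a3):
    """Phase shift in [0, 2*pi); the constant offset cancels in the differences."""
    return math.atan2(a3 - a1, a0 - a2) % (2.0 * math.pi)


def distance_from_phase(phase_rad, f_mod_hz):
    """Distance for a round trip: d = c * phase / (4 * pi * f)."""
    return C_M_PER_S * phase_rad / (4.0 * math.pi * f_mod_hz)


def unambiguous_range_m(f_mod_hz):
    """Largest distance before the phase wraps around: c / (2 * f)."""
    return C_M_PER_S / (2.0 * f_mod_hz)


F_MOD_HZ = 20e6  # hypothetical modulation frequency

samples = four_phase_samples(distance_m=0.05, f_mod_hz=F_MOD_HZ)
recovered_m = distance_from_phase(phase_from_samples(*samples), F_MOD_HZ)
print(recovered_m, unambiguous_range_m(F_MOD_HZ))
```

At endoscopic working distances of a few centimeters the phase shift is only a small fraction of a full period, which is one intuition for why sensor noise has such a strong effect on the depth values.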

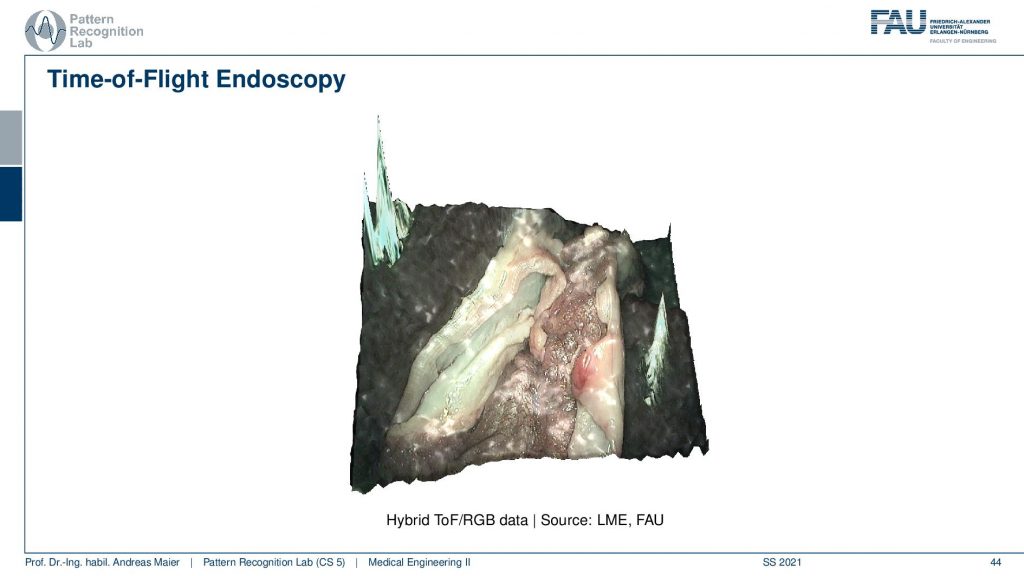

So what comes out of this is an image like this one. So here you see this example you can get the color information, you can get the surface information. One disadvantage of the sensor is that the resolution of the image is not that great and the other thing is that the depth is very noisy.

So the time-of-flight sensors are very noisy and therefore you have to apply a lot of denoising techniques. You can actually see that in the outer parts of the image, where you see those artifacts here and here. So this is actually an artifact and we were not successful in filtering it out here. Still, you get a very good image impression even inside of the patient in this area of the image. So that’s pretty cool! There has been research on it, and it was also a very nice project funded by the German Research Foundation, because it has a lot of advantages. You have a constant range image resolution, you have complete independence of texture information, and you get color and range information, so depth information, in a single shot. That’s pretty cool! You get that at high frame rates as well. The disadvantage is the low signal-to-noise ratio. So there’s a lot of noise in the sensor, which is kind of problematic, and so far this has only been used as a prototype.
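As a simple illustration of denoising a range image, here is a sketch of a 3x3 median filter. The lecture does not say which filters were actually used, so the median filter is an assumption chosen because it is a standard way to suppress isolated outliers. The depth values are made up.

```python
from statistics import median


def median_filter_3x3(depth):
    """Replace each depth value by the median of its neighborhood (up to 3x3)."""
    height, width = len(depth), len(depth[0])
    filtered = [row[:] for row in depth]
    for y in range(height):
        for x in range(width):
            window = [
                depth[j][i]
                for j in range(max(0, y - 1), min(height, y + 2))
                for i in range(max(0, x - 1), min(width, x + 2))
            ]
            filtered[y][x] = median(window)
    return filtered


# Flat surface at 50 mm with a single noisy outlier in the center.
noisy = [[50.0] * 5 for _ in range(5)]
noisy[2][2] = 120.0

denoised = median_filter_3x3(noisy)
print(noisy[2][2], denoised[2][2])
```

A median filter removes single spikes but also smooths fine surface detail, so in practice the filter choice is a trade-off between noise suppression and resolution.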

Well, what other developments have there been?

The key development is computer assistance and this is also something that can dramatically increase the safety and also the patient benefit.

I want to show you a couple of systems that use computer assistance. So in computer-assisted systems you generally have a minimally invasive procedure and you combine the visualization with data overlays in order to guide the interventionalist. Often this is then also associated with robots. The robot can then be programmed in a way that it will not move into areas of risk, and because you have very good vision and you can measure depth information, you are also able to steer the guidance and to put up those safety margins. So that’s pretty cool and actually, in neurosurgery, this is kind of popular.
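The idea of a safety margin can be sketched as a simple distance check. This is only an illustration under stated assumptions: the structure at risk is given as a small set of 3D points in millimeters, the margin of 5 mm is arbitrary, and the function names are hypothetical. It does not describe how any particular robot implements its safety logic.

```python
import math


def min_distance_mm(position, risk_points):
    """Smallest Euclidean distance from a tool position to any point at risk."""
    return min(math.dist(position, point) for point in risk_points)


def is_move_allowed(target, risk_points, margin_mm):
    """Allow a commanded tool position only if it keeps the safety margin."""
    return min_distance_mm(target, risk_points) >= margin_mm


# Hypothetical structure at risk, e.g. reconstructed from range data.
risk_points = [(0.0, 0.0, 50.0), (2.0, 0.0, 50.0)]

print(is_move_allowed((0.0, 0.0, 40.0), risk_points, margin_mm=5.0))
print(is_move_allowed((0.0, 0.0, 47.0), risk_points, margin_mm=5.0))
```

Such a check only makes sense with metric depth data, which is why calibrated 3D endoscopy and computer guidance fit together so well.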

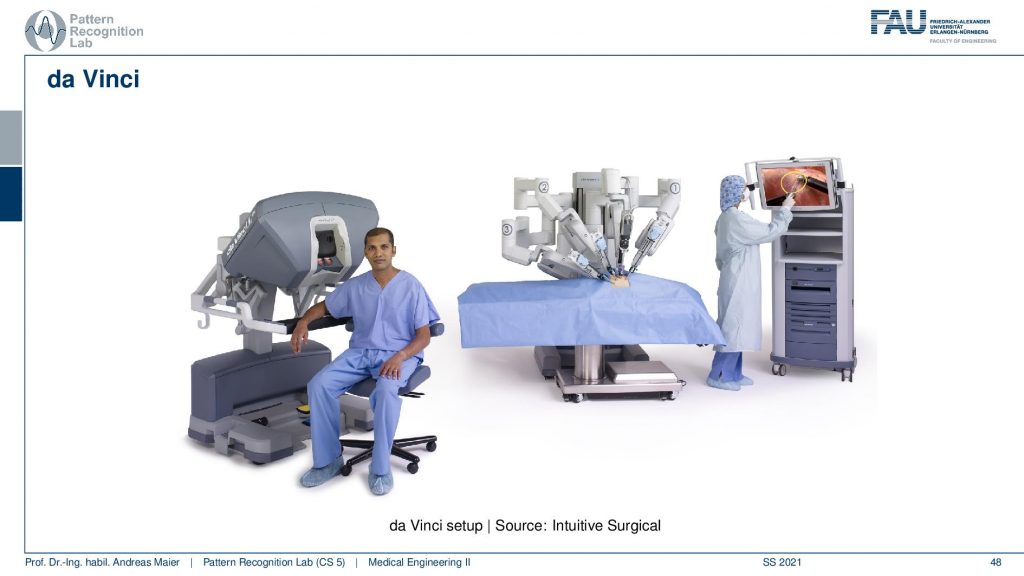

One of the devices that are being used is the da Vinci system. So this is an Intuitive Surgical system. You can see here on the left-hand side the interventionalist’s console. So you can essentially put your head in here and then you have controls here that allow you to steer the robot. It actually has stereoscopic vision, so you even have a perception of depth. Then you can essentially control these robotic arms here in order to perform the intervention. It is actually used quite frequently in neurosurgery.

So this da Vinci system was already approved by the FDA in 2000 and there were already 200,000 interventions in 2012. About 2,000 units had been sold by January 2013 and it slowly became a popular device, although a single machine costs up to two million US dollars plus maintenance fees. There used to be a competitor called ZEUS but it was discontinued in 2003. So since 2003, it’s essentially Intuitive Surgical that is running the entire market of those robots. It provides complete 3D stereo vision and intuitive control, and the newest versions even add simulators with virtual tutorials, force feedback, and so on. So in theory this would even allow distant operations, and this has already been performed in 2001. But keep in mind that latency is a key issue here.

There has also been a bit of criticism: there are high costs for the hospitals and the surgeons have to learn to control the system. But actually, the learning rate is pretty high. So people can get acquainted with the systems pretty quickly. A disadvantage is also that the software is proprietary and cannot be modified. So if you want to work with these systems in research you have to work with the vendor. So far there are very few studies that have shown the real benefits for the patients. But the surgeons really like the device because it’s really intuitive surgical. It’s intuitive to use and as you’ve seen already there’s no real competitor. There is only Intuitive Surgical that is manufacturing those devices. Now why is the thing so popular and why is it called intuitive?

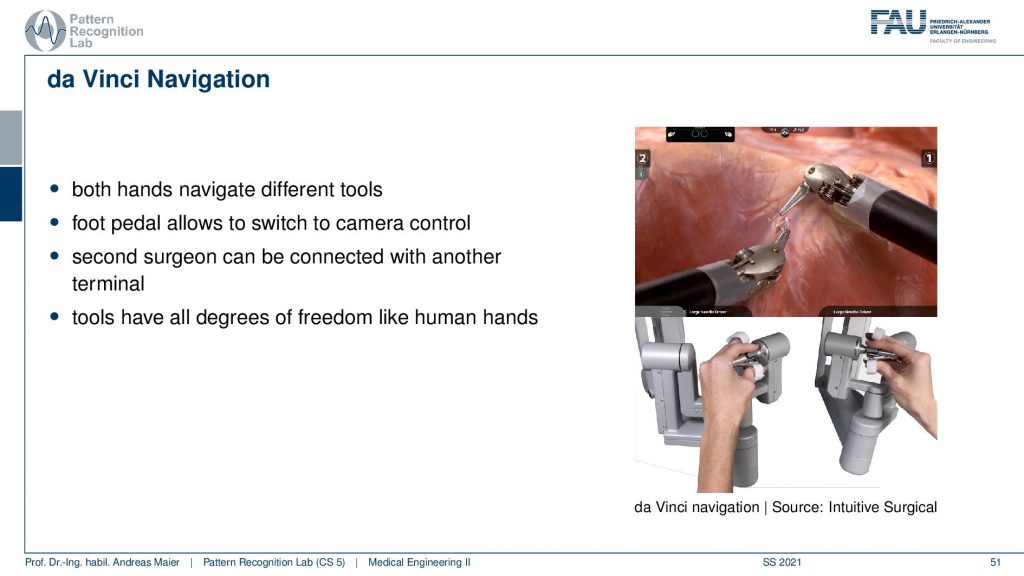

Well, you have these kinds of grips here where you place your hands and then you can immediately control the hands of the system, the robotic hands. The nice thing is that the magnification is done by the system. So if you look into the system it will appear as if everything is approximately the size that you’re used to. So you learn very, very quickly and very intuitively how to use the system. So you use both hands to navigate the tools. You have foot pedals to control the camera and you can even have a second surgeon connected with another terminal and then perform interventions together. A very, very cool feature is that the control units essentially have the same freedom as human hands. So you can grip, you can move around, and if you play with the system, then already after maybe five minutes you get used to it so quickly that you can perform first cuts. You can move stuff around, you can even try it in a simulator and, let’s say with some soft tissue simulation, you can do the cuts and then try to pass things from one hand to the other. You’ll see that this is very, very intuitive because it almost feels like a part of your body, and in particular with force feedback it gets even more realistic. So this is why the device is really popular with people who have been using it. If you ever have the chance to use one of those systems just for five minutes or ten minutes, go ahead, go to the simulator, go to the experience booth at an exhibition, and try it out. If you have the chance to go and visit Johns Hopkins I would definitely try to get an experience with one of the systems there. So this is really a great tool and I can definitely recommend having a look at it. It’s magnificent engineering. Imaging devices and all the things have to come together to build things like that.

Okay so let’s have a look at a couple of future trends and a couple of ideas about what might be coming up in the close to distant future.

Of course, something that is around is fully automated procedures.

But this is still pretty far away in particular because of legal issues. It’s the same problems that you have in autonomous driving. So who takes the liability? How can this be ensured? There are really ideas around and people would like to do that but I think this is still some time ahead. So automated procedures may not be happening so quickly. Other things are things like real-time 3D reconstruction and augmented reality. This is really something that is close to being clinically applicable and I think these techniques will still have quite a bit of impact on how you perform surgery. There are also ultra-thin endoscopes that might be a relevant development. Also, NOTES is a very interesting approach yet the navigation is pretty hard but sometimes you have to bring all these things together in order to make a point why you would want to go ahead with such a complicated procedure. Of course, there are quite a few benefits if you would be able to perform these procedures really safely. Data fusion becomes more and more important not just the interventional data fusion like different sensors but also with prior data like CT scans from prior to the surgery and also population data such that you can really build personalized models. Of course, techniques of machine learning and deep learning are on the rise and they will also impact the field of endoscopy. So finally what is a very likely development is of course higher image resolutions and improved quality data and there are already improvements on the time of flight sensors and there is super-resolution and I actually have a small video here of what can be done.

Well of course if you have any questions then you can either send them on our learning platform or you can contact me on social media. You can also send them by email.

I can recommend our textbook which you can download for free. It’s completely open access on the Springer website and it’s not just for our university so everybody in the whole world can download this. So go ahead have a look at the textbook. You don’t have to buy it and if you don’t like it just delete it. Just click the link and have a look at our textbook.

Well, this brings us already to the end of this video. So you’ve seen the various future trends and new developments that are currently still part of the research. Some of them have already been translated to clinical use, like the da Vinci system, and this is a growing sector in the field. So you see that all the new developments still change the clinical routine. If you’re a clinician, a surgeon, and if you learned your profession 20 years ago, you have to adapt all the time to know the newest trends, the safest surgeries, and the kind of treatments that are most beneficial for your patient. So this is something that you really have to keep in mind in medicine. This is an always-changing field, there are always new insights, and people typically also like to contribute to these developments. You will find that many of the medical doctors are very open to research. Therefore it’s very good that we have people like medical engineers around who understand all of the technology but also have a fundamental understanding of the medical reasons and the anatomy such that the interdisciplinary communication can work out. So I hope you liked this little video and this is the last video about endoscopy. In the next video, we will still talk about optical measurements and in particular, we want to focus on microscopy. So thank you very much for watching and looking forward to seeing you in the next video. Bye-bye!

This article is released under the Creative Commons 4.0 Attribution License and can be reprinted and modified if referenced.

References

- Maier, A., Steidl, S., Christlein, V., Hornegger, J. Medical Imaging Systems – An Introductory Guide, Springer, Cham, 2018, ISBN 978-3-319-96520-8, Open Access at Springer Link

Video References

- Applications of Confocal Endomicroscopy in Colon Polyps https://youtu.be/hcyRXqCIqp0

- Quantitative 3D Endoscopy using a Time-of-Flight Camera https://youtu.be/PkxGd7bGpcM

- Precision da Vinci Robotic Surgery Peels Grape Skin https://youtu.be/cpPofyZbvDw