Hendrik Schröter, M. Sc.

- Since 02/2019:

Ph.D. researcher in the speech processing group at the pattern recognition lab with a research focus on signal processing and speech enhancement.

- 04/2016 – 12/2018:

M.Sc. student (computer science) at Friedrich-Alexander-Universität Erlangen-Nürnberg, with a focus on pattern recognition.

- 10/2011 – 09/2014:

B.Eng. student (mechatronic engineering) at Duale Hochschule Mannheim, working student at Schenck Process GmbH.

Professional career:

- 10/2014 – 04/2016:

Software developer at Schenck Process GmbH, Darmstadt, Germany.

A non-exhaustive list of tasks: database development, processing of measurement data, design and implementation of custom interfaces to customer data stores, and UI development.

2019

-

Deep Learning based Noise Reduction for Hearing Aids

(Third Party Funds Single)

Project leader:

Term: February 1, 2019 - January 31, 2023

Funding source: Industry

Reduction of unwanted environmental noise is an important feature of today’s hearing aids, which is why noise reduction is now included in almost every commercially available device. The majority of these algorithms, however, are restricted to the reduction of stationary noise. Due to the large number of different background noises in daily situations, it is hard to heuristically cover the complete solution space of noise reduction schemes. Deep learning-based algorithms offer a possible solution to this dilemma; however, they sometimes lack robustness and applicability in the strict context of hearing aids.

In this project, we investigate several deep learning-based methods for noise reduction under the constraints of modern hearing aids. This involves low-latency processing as well as the use of a hearing instrument-grade filter bank. Another important aim is the robustness of the developed methods. Therefore, the methods will be applied to real-world noise signals recorded with hearing instruments.

2018

-

Deep Learning Applied to Animal Linguistics

(FAU Funds)

Project leader:

Term: April 1, 2018 - April 1, 2022

Acronym: DeepAL

Deep Learning Applied to Animal Linguistics, in particular the analysis of underwater audio recordings of marine animals (killer whales):

For marine biologists, the interpretation and understanding of underwater audio recordings is essential. Based on such recordings, conclusions about the behavior, communication and social interactions of marine animals can be drawn. Despite a large number of biological studies on orca vocalizations, it is still difficult to recognize a structure or semantic/syntactic significance in orca signals from which language and/or behavioral patterns could be derived. Due to a lack of techniques and computational tools, hundreds of hours of underwater recordings are still manually verified by marine biologists in order to detect potential orca vocalizations. In a post-processing step, these identified orca signals are analyzed and categorized. One of the main goals is to provide a robust method that automatically detects orca calls within underwater audio recordings. Robust detection of orca signals is the baseline for any further and deeper analysis. Call type identification and classification based on pre-segmented signals can be used to derive semantic and syntactic patterns. In connection with the associated situational video recordings and behavior descriptions (provided by several researchers on site), these patterns can provide potential information about communication (a kind of language model) and behaviors (e.g. hunting, socializing). Furthermore, orca signal detection can be used in conjunction with localization software to give researchers in the field a more efficient way of searching for the animals, as well as for individual recognition.

For more information about the DeepAL project please contact christian.bergler@fau.de.

2023

Conference Contributions

- , , , :

DeepFilterNet: Perceptually Motivated Real-Time Speech Enhancement

INTERSPEECH (Dublin, Ireland, August 20, 2023 - August 24, 2023)

In: INTERSPEECH 2023 2023

Open Access: https://arxiv.org/abs/2305.08227

- , , , :

Deep Multi-Frame Filtering for Hearing Aids

INTERSPEECH (Dublin, Ireland, August 20, 2023 - August 24, 2023)

In: INTERSPEECH 2023 2023

Open Access: https://arxiv.org/abs/2305.08225


2022

Journal Articles

- , , , :

Low Latency Speech Enhancement for Hearing Aids Using Deep Filtering

In: IEEE/ACM Transactions on Audio, Speech and Language Processing 30 (2022), p. 2716-2728

ISSN: 2329-9290

DOI: 10.1109/TASLP.2022.3198548


Conference Contributions

- , , , :

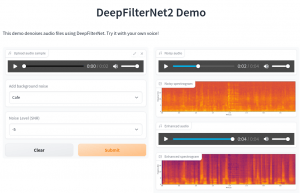

DeepFilterNet2: Towards Real-Time Speech Enhancement on Embedded Devices for Full-Band Audio

International Workshop on Acoustic Signal Enhancement (IWAENC 2022) (Bamberg, September 5, 2022 - September 8, 2022)

In: International Workshop on Acoustic Signal Enhancement (IWAENC 2022) 2022

DOI: 10.1109/iwaenc53105.2022.9914782

URL: https://github.com/Rikorose/DeepFilterNet

- , , , :

DeepFilterNet: A Low Complexity Speech Enhancement Framework for Full-Band Audio based on Deep Filtering

ICASSP 2022 (Singapore, May 22, 2022 - May 27, 2022)

In: ICASSP 2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) 2022

DOI: 10.1109/icassp43922.2022.9747055

URL: https://github.com/Rikorose/DeepFilterNet


2021

Conference Contributions

- , , , :

LACOPE: Latency-Constrained Pitch Estimation for Speech Enhancement

Interspeech 2021 (Brno, August 31, 2021 - September 3, 2021)

In: Proc. Interspeech 2021 2021

DOI: 10.21437/interspeech.2021-633


2020

Conference Contributions

- , , , , , :

Predicting Hearing Aid Fittings Based on Audiometric and Subject-Related Data: A Machine Learning Approach

Virtual Conference on Computational Audiology (VCCA2020) (Virtual, June 19, 2020 - June 19, 2020)

In: Virtual Conference on Computational Audiology (VCCA2020) 2020

URL: https://computationalaudiology.com/predicting-hearing-aid-fittings-based-on-audiometric-and-subject-related-data-a-machine-learning-approach/

- , , , , :

CLCNet: Deep learning-based noise reduction for hearing aids using complex linear coding

IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) (Barcelona, May 4, 2020 - May 8, 2020)

In: ICASSP 2020-2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) 2020

DOI: 10.1109/icassp40776.2020.9053563

URL: https://rikorose.github.io/CLCNet-audio-samples.github.io/

- , , , , :

Lightweight Online Noise Reduction on Embedded Devices using Hierarchical Recurrent Neural Networks

INTERSPEECH 2020 (Shanghai, October 25, 2020 - October 29, 2020)

In: INTERSPEECH 2020 2020

DOI: 10.21437/interspeech.2020-1131

URL: https://arxiv.org/abs/2006.13067


Miscellaneous

- , :

Ubicomp Digital 2020 - Handwriting classification using a convolutional recurrent network

Ubicomp 2020 - Time Series Classification Challenge (January 1, 2020 - August 3, 2020)

Open Access: https://arxiv.org/abs/2008.01078


(Working Paper)

- , , , :

CLC: Complex Linear Coding for the DNS 2020 Challenge

(2020)

Open Access: https://arxiv.org/abs/2006.13077

URL: https://github.com/Rikorose/clc-dns-challenge-2020


(Working Paper)

2019

Journal Articles

- , , , , , , , :

ORCA-SPOT: An Automatic Killer Whale Sound Detection Toolkit Using Deep Learning

In: Scientific Reports 9 (2019), p. 1-17

ISSN: 2045-2322

DOI: 10.1038/s41598-019-47335-w


Conference Contributions

- , , , , , :

Segmentation, Classification, and Visualization of Orca Calls Using Deep Learning

International Conference on Acoustics, Speech, and Signal Processing (ICASSP) (Brighton, May 12, 2019 - May 17, 2019)

In: ICASSP 2019 - 2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) 2019

DOI: 10.1109/ICASSP.2019.8683785

URL: https://ieeexplore.ieee.org/abstract/document/8683785

- , , , , , , , :

Deep Representation Learning for Orca Call Type Classification

22nd International Conference on Text, Speech, and Dialogue, TSD 2019 (Ljubljana, September 11, 2019 - September 13, 2019)

In: Kamil Ekštein (ed.): Text, Speech, and Dialogue, 22nd International Conference, TSD 2019, Ljubljana, Slovenia, September 11–13, 2019, Proceedings 2019

DOI: 10.1007/978-3-030-27947-9_23


| Type | Title | Status |
|---|---|---|
| MA thesis | Binary Neural Networks for Enhanced Processing in Hearing Aids | finished |
| MA thesis | Distillation Learning for Speech Enhancement | finished |
| MA thesis | Deep Learning-based Pitch Estimation and Comb Filter Construction | finished |
| MA thesis | Deep Learning based Beamforming for Hearing Aids | finished |
| MA thesis | Predicting Hearing Aid Fittings Based on Audiometric and Subject-Related Data: A Machine Learning Approach | finished |
| MA thesis | Deep Learning-based Spectral Noise Reduction for Hearing Aids | finished |
| MA thesis | Multi-Task Learning for Speech Enhancement and Phoneme Recognition | finished |
| Project | Development of a deep learning-based phoneme recognizer for noisy speech | finished |
| BA thesis | Development of a pre-processing/simulation Framework for Multi-Channel Audio Signals | finished |