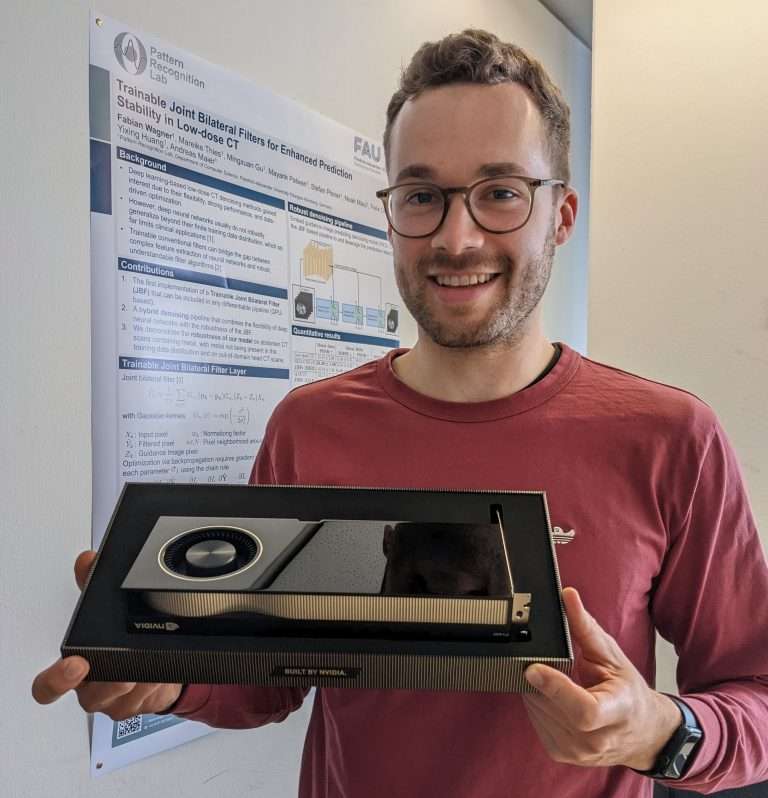

Pattern Recognition Symposium – July 25th to 29th 2022

With great excitement, we announce our Pattern Recognition Lab (PRL) conference. From July 25th to 29th, 2022, the members of the PRL will share their latest research at our traditional event.

Timetable PDF

Date: 25th to 29th of July, 2022

Zoom link for oral presentations: https://fau.zoom.us/j/61360917228?pwd=azZKY2xDWk5ONWNQc01XbzIrNWZlQT09